Nvidia Pulls Ahead in GPU Performance Race With New Processor

Aimed at high-performance computing and the burgeoning field of generative AI, the H200 Tensor Core GPU represents a significant leap forward in GPU technology. According to Nvidia, the new GPU's memory and processing enhancements give it performance well beyond that of any previous GPU. The H200 outperforms its predecessor, the H100, nearly doubling its performance on some large language model workloads. It carries 141 GB of the latest HBM3e memory, which provides 4.8 terabytes per second (TB/s) of memory bandwidth.

Nvidia H200 Tensor Core GPU. Image used courtesy of Nvidia
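To put the memory figures in perspective, here is a back-of-the-envelope sketch (our own arithmetic, not an Nvidia tool) of how long it takes to read all 141 GB once at 4.8 TB/s, plus a simplified upper bound on LLM token generation. The bound assumes that generating one token at batch size 1 requires reading every model weight from memory once, and it uses a hypothetical 70-billion-parameter model stored in FP8; it ignores caches, the KV cache, and compute limits.

```python
# Figures quoted for the H200, in decimal units as vendors use them.
HBM_CAPACITY_GB = 141        # memory capacity in gigabytes
HBM_BANDWIDTH_TBPS = 4.8     # memory bandwidth in terabytes per second


def sweep_time_ms(bytes_to_read: float, bandwidth_bytes_per_s: float) -> float:
    """Minimum time, in milliseconds, to stream `bytes_to_read` once at the given bandwidth."""
    return bytes_to_read / bandwidth_bytes_per_s * 1e3


def max_tokens_per_s(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Simplified upper bound on batch-1 decoding speed.

    Assumption: each generated token reads every weight from memory exactly once.
    """
    return bandwidth_bytes_per_s / weight_bytes


bandwidth = HBM_BANDWIDTH_TBPS * 1e12
full_memory = HBM_CAPACITY_GB * 1e9
print(f"Full-memory sweep: {sweep_time_ms(full_memory, bandwidth):.1f} ms")

# Hypothetical model: 70 billion parameters at 1 byte each (FP8).
weights = 70e9 * 1
print(f"Bound for a 70B FP8 model: {max_tokens_per_s(weights, bandwidth):.0f} tokens/s")
```

Under these assumptions, a full sweep of memory takes roughly 29 ms, which is why memory bandwidth, not just raw compute, sets the pace for LLM inference.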

The GPU delivers nearly 4 petaflops of eight-bit floating-point (FP8) math and, according to Nvidia, nearly doubles the large language model (LLM) inference performance of the H100. For high-performance computing (HPC), Nvidia cites up to 110X faster time to results compared with CPU-only systems.
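FP8 packs each number into a single byte. Hopper supports two FP8 formats, E4M3 and E5M2; as an illustration, the minimal decoder below interprets one E4M3 byte (1 sign bit, 4 exponent bits with a bias of 7, 3 mantissa bits) following the commonly published E4M3 convention, which has no infinities and reserves only the all-ones exponent-and-mantissa pattern for NaN. It is a teaching sketch, not Nvidia code, and the name `decode_e4m3` is our own.

```python
import math


def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 value: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("an FP8 value is a single byte")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0x0F and mantissa == 0x07:
        # E4M3 has no infinities; only this bit pattern (either sign) is NaN.
        return math.nan
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - bias.
        return sign * (mantissa / 8) * 2.0 ** (1 - 7)
    # Normal: implicit leading 1 plus a 3-bit fraction.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


for raw in (0x38, 0xB8, 0x7E, 0x01):
    print(hex(raw), decode_e4m3(raw))
```

The largest finite E4M3 value, 0x7E, decodes to 448, which illustrates the narrow dynamic range FP8 accepts in exchange for halving memory and bandwidth per value compared with FP16.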

Hopper Architecture Underlies the New GPU

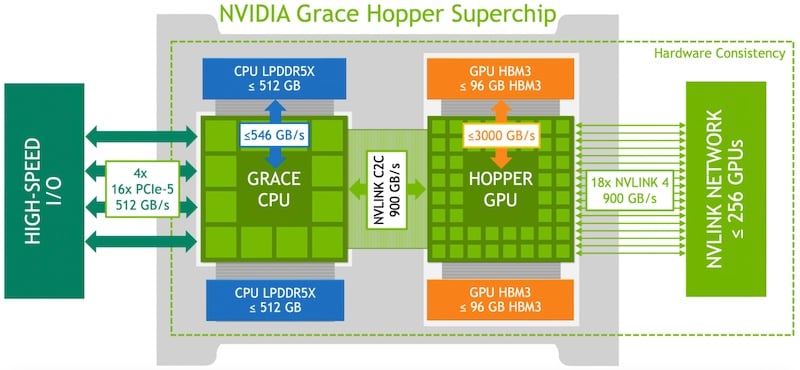

The H200 is built on Nvidia's Hopper architecture, named after computing pioneer Rear Admiral Grace Hopper. Hopper introduces the Tensor Memory Accelerator (TMA), a hardware unit that offloads large data transfers from the GPU's compute threads. TMA supports bidirectional asynchronous transfers between global memory and shared memory.

Logical overview of the Nvidia Grace Hopper Superchip. Image used courtesy of Nvidia

Because these transfers run asynchronously, the GPU can keep computing while the next block of data moves, which increases the speed at which tensors can be processed. TMA can transfer tensors with up to five dimensions (5D). In this context, a tensor is a multidimensional array: a vector is a 1D tensor, a matrix is a 2D tensor, and tensors can extend to three or more dimensions.
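TMA itself is programmed through tensor-map descriptors created with the CUDA driver API, which is beyond the scope of this article. As a conceptual stand-in only, the sketch below shows the kind of work TMA offloads: gathering a rectangular tile (a "box") from a row-major tensor of up to five dimensions by computing each element's address from the tensor's shape and strides. The names `row_major_strides` and `copy_box` are hypothetical, and zero-filling out-of-bounds elements is a simplifying assumption about how edge tiles are padded.

```python
from itertools import product
from typing import List, Sequence


def row_major_strides(shape: Sequence[int]) -> List[int]:
    """Element strides for a densely packed row-major tensor."""
    strides = [1] * len(shape)
    for i in range(len(shape) - 2, -1, -1):
        strides[i] = strides[i + 1] * shape[i + 1]
    return strides


def copy_box(src: Sequence[float], shape: Sequence[int],
             origin: Sequence[int], box: Sequence[int]) -> List[float]:
    """Gather a box of elements from a flat tensor with 1 to 5 dimensions.

    Elements that fall outside the tensor are filled with zero (an assumption
    made for this sketch).
    """
    if not 1 <= len(shape) <= 5:
        raise ValueError("this sketch handles 1D to 5D tensors")
    if not len(shape) == len(origin) == len(box):
        raise ValueError("shape, origin, and box must have the same rank")
    strides = row_major_strides(shape)
    tile = []
    # Walk the box in row-major order, translating each coordinate to a flat offset.
    for offset in product(*(range(b) for b in box)):
        coord = [o + d for o, d in zip(origin, offset)]
        if all(0 <= c < n for c, n in zip(coord, shape)):
            tile.append(src[sum(c * s for c, s in zip(coord, strides))])
        else:
            tile.append(0.0)
    return tile


# A 3x4 matrix holding 0..11; copy the 2x2 tile whose top-left corner is (1, 1).
print(copy_box(list(range(12)), (3, 4), (1, 1), (2, 2)))
```

In hardware, one thread can issue such a tile copy and the data moves asynchronously, whereas this sketch walks every element in a Python loop.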

H200 Blazes a Trail With HBM3e Memory

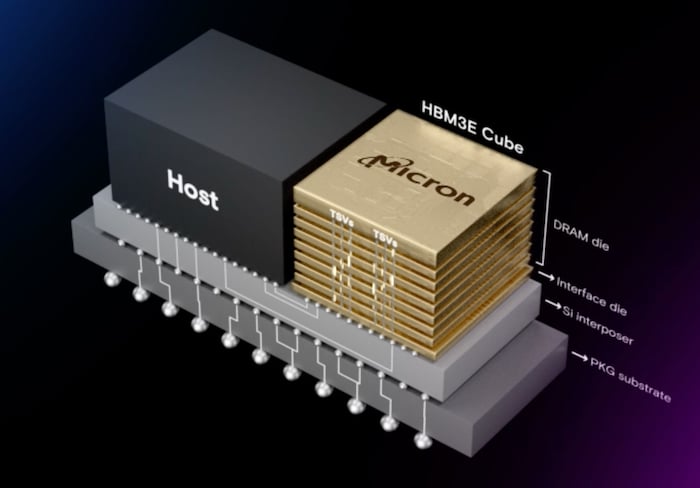

The H200 is the first GPU to offer HBM3e, the newest high-bandwidth memory standard, which delivers more than 1.2 TB/s of bandwidth per memory stack. Each stack consists of eight memory dies stacked on a base logic die and sits next to the GPU on a silicon interposer. Each stack holds 24 GB, a 50% increase in memory density over the prior generation.

HBM3e memory system. Image used courtesy of Micron
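The per-stack numbers can be checked with simple arithmetic. The sketch below assumes a 1,024-bit data interface per HBM stack, a per-pin data rate of 9.2 Gb/s (Micron's published HBM3e figure, treated here as an assumption), and 16 Gb dies in the prior generation's eight-high stacks; the helper names are illustrative.

```python
HBM_INTERFACE_BITS = 1024  # data width of one HBM stack interface


def stack_bandwidth_gb_s(pin_rate_gbit_s: float,
                         interface_bits: int = HBM_INTERFACE_BITS) -> float:
    """Peak bandwidth of one stack in GB/s: pins x per-pin rate / 8 bits per byte."""
    return pin_rate_gbit_s * interface_bits / 8


def die_capacity_gbit(stack_capacity_gbyte: float, dies_per_stack: int) -> float:
    """Per-die capacity in gigabits for a stack of identical dies."""
    return stack_capacity_gbyte * 8 / dies_per_stack


# Assumed 9.2 Gb/s per pin: about 1,178 GB/s, i.e. roughly 1.2 TB/s per stack.
print(stack_bandwidth_gb_s(9.2))

# 24 GB spread over 8 dies gives 24 Gb per die.
per_die = die_capacity_gbit(24, 8)
print(per_die)

# Relative gain over an assumed 16 Gb die from the prior generation (0.5 = 50%).
print(per_die / 16 - 1)
```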

According to Micron, HBM3e offers the highest-capacity near-chip memory in the industry. Stacking memory right next to the processor delivers higher speeds at lower power than conventional off-package memory. HPC, deep learning, and generative AI all push memory bandwidth and matrix math to their limits. By placing faster memory closer to the processor, HBM3e keeps the compute units supplied with data, so less of the processor's computational capability sits idle waiting on memory.
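One common way to see why bandwidth matters as much as raw FLOPS is the roofline model, a general performance model rather than anything specific to Nvidia or Micron. A kernel's attainable throughput is the lesser of the chip's peak compute and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch plugs in the article's round figures of 4 petaflops and 4.8 TB/s; the arithmetic intensities are hypothetical, and real kernels rarely reach vendor peak numbers.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_s: float, intensity: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_s * intensity)


def ridge_point(peak_flops: float, bandwidth_bytes_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_flops / bandwidth_bytes_s


PEAK = 4e15   # ~4 petaflops of FP8, the round figure quoted in the article
BW = 4.8e12   # 4.8 TB/s of HBM3e bandwidth

print(f"Ridge point: {ridge_point(PEAK, BW):.0f} FLOP/byte")
# Hypothetical kernels, from low to high arithmetic intensity.
for ai in (2, 100, 1000):
    fraction = attainable_flops(PEAK, BW, ai) / PEAK
    print(f"intensity {ai:>4}: {fraction:.2%} of peak")
```

Kernels below the ridge point, roughly 833 FLOPs per byte with these numbers, are limited by memory bandwidth, which is why faster memory can raise delivered performance without any change to the compute units.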

The Role of H200 in Supercomputing and Beyond

Nvidia has been a leader in compute-intensive workloads since GPUs were first repurposed for supercomputers. GPUs are massively parallel chips that can perform many math calculations simultaneously, which suits graphics processing, blockchain, large-scale data analysis, and artificial intelligence. Although Nvidia's core market started in gaming and high-end graphics, its GPUs turned out to be the right product at the right time for 21st-century supercomputing and cryptocurrency mining.

While the underlying GPU architecture may share some features with gamer-oriented chips, Hopper-based parts such as the H200 are aimed squarely at data centers and supercomputers. One example is the Jupiter supercomputer being developed at the Forschungszentrum Jülich facility in Germany, which is built from nodes containing four Nvidia GH200 Grace Hopper Superchips. The system is projected to deliver one exaflop for HPC applications while consuming only 18.2 megawatts (MW) of power.
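The projected Jupiter figures imply an energy efficiency that is easy to compute. Using the one-exaflop and 18.2 MW projections as given (both are projections, not measurements), the sketch below converts them to GFLOPS per watt and to energy per floating-point operation; the helper names are our own.

```python
def gflops_per_watt(flops_per_s: float, watts: float) -> float:
    """Sustained FLOPS divided by power draw, expressed in GFLOPS/W."""
    return flops_per_s / watts / 1e9


def picojoules_per_flop(flops_per_s: float, watts: float) -> float:
    """Energy per operation: (joules per second) / (operations per second), in pJ."""
    return watts / flops_per_s * 1e12


JUPITER_HPC_FLOPS = 1e18   # projected: one exaflop for HPC applications
JUPITER_POWER_W = 18.2e6   # projected: 18.2 MW

print(f"{gflops_per_watt(JUPITER_HPC_FLOPS, JUPITER_POWER_W):.1f} GFLOPS/W")
print(f"{picojoules_per_flop(JUPITER_HPC_FLOPS, JUPITER_POWER_W):.1f} pJ per FLOP")
```

Under the projected figures, that works out to roughly 55 GFLOPS/W, or about 18 pJ of energy per operation.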

Other H200 systems will be installed for business-oriented LLM processing, where total cost of ownership and energy efficiency are important considerations. Nvidia asserts that the H200 and the Hopper architecture will play a major role in the advance of generative AI over the coming years. The H200 is expected to be available in Q2 2024.